In the coming days, Matthias will be releasing our Expected Goals 2.0 statistics for 2014. You can find the 2013 version already uploaded here. I would imagine that basically everything I've been tweeting out from our @AnalysisEvolved twitter handle about expected goals up to this point will certainly be less cool, but he informs me it won't be entirely obsolete. He'll explain when he presents it, but the concept behind the new metrics is familiar, and there is a reason why I use xGF to describe how teams performed in their attempt to win a game. It's important to understand that there is a difference between actual results and expected goals: one yields the points for the game, and the other indicates possible future performance.

However, this post isn't about expected goal differential anyway; it's about expected goals for. Offense. This obviously omits what the team did defensively (and that's why xGD is so ideal in quantifying a team performance), but I'm not all about the team right now. These posts are about clubs' ability to create goals through the quality of their shots. It's a different method of measurement than that of PWP, and really it's measuring something completely different.

Take for instance the game which featured Columbus beating Philadelphia on a couple of goals from Bernardo Anor, who aside from those goals turned in a great game overall and was named Chris Gluck's attacking player of the week. That said, know that the goals that Anor scored are not goals that can be consistently counted upon in the future. That's not to diminish the quality or the fact that they happened. It took talent to make both happen. They're events---a wide open header off a corner and a screamer from over 25 yards out---that I wouldn't expect him to replicate week in and week out.

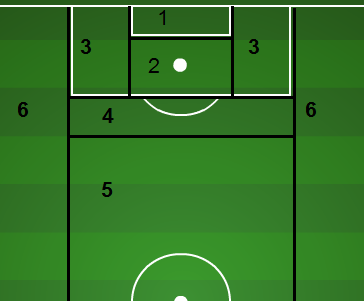

Obviously Columbus got some shots in good locations, which they capitalized on, but the xGF metric tells us that while they scored two goals and won the match, the average shot taker would have produced just a little more than one expected goal. Their opponents took a cumulative eleven shots inside the 18-yard box, which we consider to be a dangerous location. Those shots, plus the six from long range, add up to nearly two goals' worth of xGF. This tells us two pretty basic things: 1) Columbus scored a lucky goal somewhere (maybe the 25-yard screamer?), and 2) they allowed a lot of shots in dangerous locations and were probably lucky to come out with the full 3 points.

Again, if you are a Columbus Crew fan and you think I'm criticizing your team's play, I'm not doing that. I'm merely looking at how many shots they produced versus how many goals they scored and telling you what would probably happen the majority of the time with those specific rates.
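To put rough numbers on that gap, here is a minimal sketch in Python. It assumes only the two xGF values listed for Columbus and Philadelphia in this post's table and the two goals Columbus actually scored; the variable names are made up for illustration, and xGD is taken simply as a team's xGF minus its opponent's xGF.

```python
# xGF values are copied from the table in this post; the goal tally comes
# from the match recap above. Variable names are illustrative only.
xgf = {"Columbus": 1.085, "Philadelphia": 2.131}
columbus_goals = 2

# Goals scored beyond what an average shot taker would expect from those shots.
goals_above_expected = round(columbus_goals - xgf["Columbus"], 3)

# Expected goal differential from Columbus's side, assuming xGD is simply
# their own xGF minus the opponent's xGF.
columbus_xgd = round(xgf["Columbus"] - xgf["Philadelphia"], 3)

print(goals_above_expected)  # 0.915
print(columbus_xgd)          # -1.046
```

In other words, Columbus finished almost a full goal ahead of their shot quality while being out-created by about a goal, which is the "probably lucky" part.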

| Team | shot1 | shot2 | shot3 | shot4 | shot5 | shot6 | Shot-total | xGF |
|---|---|---|---|---|---|---|---|---|
| Chicago | 1 | 3 | 3 | 3 | 3 | 0 | 13 | 1.283 |
| Chivas | 0 | 3 | 2 | 2 | 3 | 0 | 10 | 0.848 |
| Colorado | 1 | 4 | 4 | 2 | 1 | 1 | 13 | 1.467 |
| Columbus | 0 | 5 | 1 | 2 | 1 | 0 | 9 | 1.085 |
| DC | 0 | 0 | 1 | 1 | 4 | 0 | 6 | 0.216 |
| FC Dallas | 0 | 6 | 2 | 0 | 1 | 1 | 10 | 1.368 |
| LAG | 0 | 0 | 4 | 2 | 3 | 0 | 9 | 0.459 |
| Montreal | 2 | 4 | 5 | 8 | 7 | 0 | 26 | 2.27 |
| New England | 1 | 2 | 1 | 8 | 5 | 0 | 17 | 1.275 |
| New York | 2 | 4 | 2 | 0 | 2 | 0 | 10 | 1.518 |
| Philadelphia | 2 | 5 | 6 | 2 | 4 | 0 | 19 | 2.131 |
| Portland | 0 | 0 | 2 | 2 | 2 | 1 | 7 | 0.329 |
| RSL | 0 | 4 | 3 | 0 | 3 | 0 | 10 | 0.99 |
| San Jose | 0 | 2 | 0 | 0 | 3 | 0 | 5 | 0.423 |
| Seattle | 1 | 4 | 0 | 2 | 2 | 0 | 9 | 1.171 |
| Sporting | 2 | 6 | 2 | 2 | 3 | 2 | 17 | 2.071 |
| Toronto | 0 | 6 | 4 | 2 | 2 | 0 | 14 | 1.498 |
| Vancouver | 0 | 1 | 1 | 3 | 3 | 0 | 8 | 0.476 |

Now we've talked about this before, and one thing that xGF, or xGD for that matter, doesn't take into account is game state: when the shot was taken and what the score was. This is something that we want to adjust for in future versions, as that sort of thing has a huge impact on team strategy and the value of each shot taken and allowed. Looking around at other games like Columbus's, Seattle scored an early goal in their match against Montreal, and, as mentioned, it changed their tactics. Yet despite that, and the fact that the Sounders only had 52 total touches in the attacking third, they were still able to average a shot for every 5.8 of those touches over the course of the match.

It could imply a few different things. To me, it says that Seattle took advantage of their opportunities to shoot, and that even while allowing so many shots they turned those into opportunities for themselves. They probably weren't as overmatched as it might seem given the advantage that Montreal had in shots (26) and final third touches (114). Going back to Columbus, Philadelphia was similar to Montreal in that both clubs had a good amount of touches, but the real difference between the matches seems to be that Seattle responded with a good ratio of touches to shots (5.78), and Columbus did not (9.33).
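The touches-to-shots ratio is just attacking-third touches divided by total shots. As a quick sketch in Python (the function and variable names are made up for illustration; the counts are the "att passes Total" and "Shot-total" figures from the tables in this post):

```python
# (attacking-third touches, total shots) copied from the tables in this post.
matches = {
    "Seattle": (52, 9),
    "Montreal": (114, 26),
    "Columbus": (84, 9),
    "Philadelphia": (85, 19),
}


def touches_per_shot(att_touches, shots):
    """Attacking-third touches a team needed, on average, to produce one shot."""
    return round(att_touches / shots, 3)


ratios = {team: touches_per_shot(t, s) for team, (t, s) in matches.items()}

for team, ratio in ratios.items():
    print(f"{team}: {ratio}")
```

A lower number means a team needed fewer touches in the final third to get a shot off. Seattle and Columbus both finished with nine shots, but Columbus needed 84 touches to get there against Seattle's 52.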

These numbers don't contradict PWP. Columbus did a lot of things right, looked extremely good, and dare I say they make me look rather brilliant for picking them at the start of the season as a possible playoff contender. That said, their shot numbers are underwhelming, and if they want to score more goals they are going to need to grow a set and take some shots.

| Team | Att. passes complete | Att. passes incomplete | Att. passes total | Att. passes per shot | Att. pass % | KP |
|---|---|---|---|---|---|---|
| Chicago | 26 | 17 | 43 | 3.308 | 60.47% | 7 |
| Chivas | 32 | 29 | 61 | 6.100 | 52.46% | 2 |
| Colorado | 58 | 27 | 85 | 6.538 | 68.24% | 7 |
| Columbus | 53 | 31 | 84 | 9.333 | 63.10% | 5 |
| DC | 61 | 45 | 106 | 17.667 | 57.55% | 3 |
| FC Dallas | 34 | 26 | 60 | 6.000 | 56.67% | 2 |
| LAG | 43 | 23 | 66 | 7.333 | 65.15% | 6 |
| Montreal | 63 | 51 | 114 | 4.385 | 55.26% | 11 |
| New England | 41 | 29 | 70 | 4.118 | 58.57% | 7 |
| New York | 57 | 41 | 98 | 9.800 | 58.16% | 6 |
| Philadelphia | 56 | 29 | 85 | 4.474 | 65.88% | 10 |
| Portland | 10 | 9 | 19 | 2.714 | 52.63% | 3 |
| RSL | 54 | 32 | 86 | 8.600 | 62.79% | 3 |
| San Jose | 37 | 20 | 57 | 11.400 | 64.91% | 3 |
| Seattle | 33 | 19 | 52 | 5.778 | 63.46% | 5 |
| Sporting | 47 | 29 | 76 | 4.471 | 61.84% | 7 |
| Toronto | 30 | 24 | 54 | 3.857 | 55.56% | 6 |
| Vancouver | 21 | 20 | 41 | 5.125 | 51.22% | 2 |

There is a lot more to comment on than just Columbus/Philadelphia and Montreal/Seattle (Hi Portland and your 19 touches in the final third!). But these are the games that stood out to me as being analytically awkward when it comes to the numbers that we produce with xGF, and I thought they were good examples of how we're trying to better quantify the game. It's not that we do it perfectly (the metric is far from perfect). Instead, it's about trying to get better and move forward with this type of analysis, as opposed to just using some dried up cliché to describe a defense, like "that defense is made of warriors with steel plated testicles" or some other garbage.

This is NUUUUUuuuuummmmmbbbbbbeeerrrs. Numbers!